Update: there is a more recent talk & summary of this content at 7 wastes of data production – when pipelines become sewers.

I realised recently that this is one of the lenses through which I look at the data engineering world, but I had never expressed these (lean) wastes explicitly. This post might be useful for data engineers exploring lean concepts, or lean practitioners trying to make sense of data & analytics processes. This isn’t just a theoretical view; these wastes are real, and have a real impact on organisational success, which I try to quantify. These wastes also impact our ability to do good work, and to enjoy it!

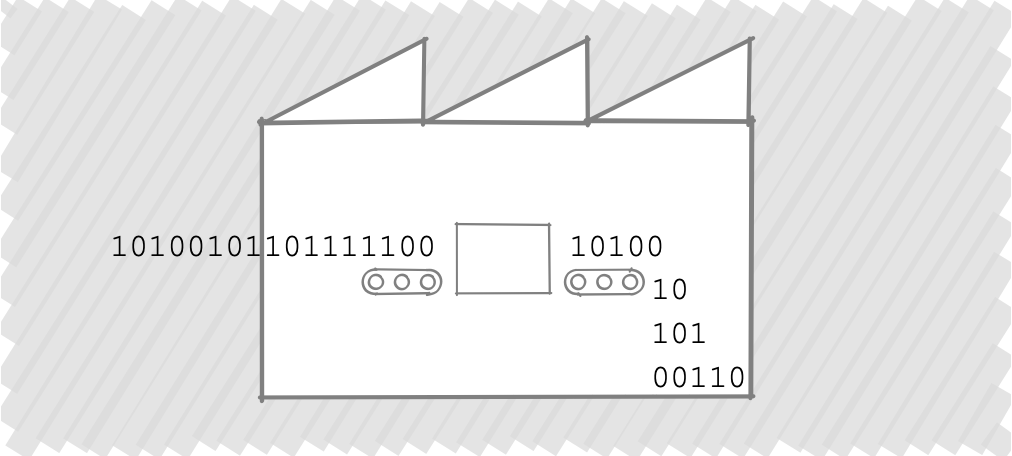

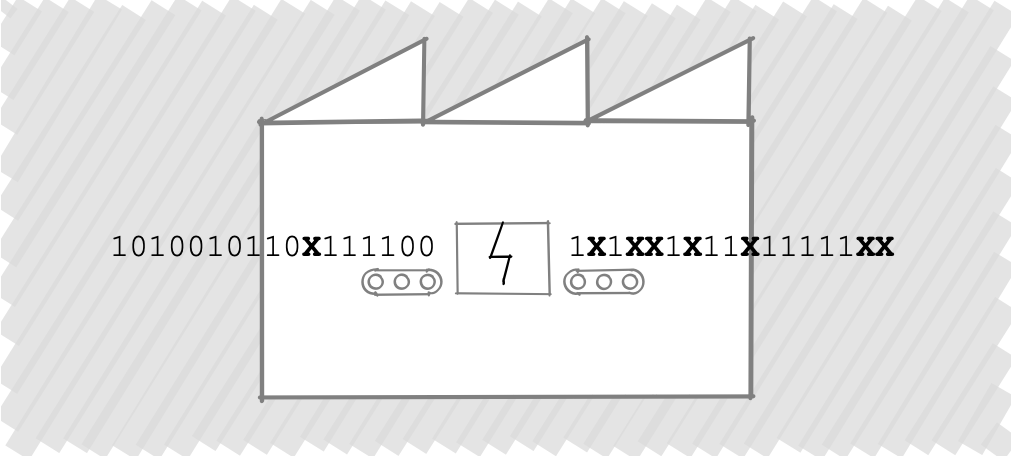

When I talk about data production, I’m talking about building and running a factory that transforms data signals from the world into useful insights, improved operations and great experiences. This means connecting data sources from suppliers through transformations to consumers, who might be customers, team members, or partners.

In this post, I’ll look at lean wastes through the lens of building the factory and running the factory. Building the factory – modifying the processing pathways for data – is a software development exercise. Running the factory – propagating new inputs along processing pathways – is a manufacturing operations exercise, but for data rather than physical products, and hence the manufacturing machines are all software. NB. one team can look through multiple lenses – see data + dev + ops!

Lean wastes (muda) were originally defined with reference to physical manufacturing, though there are analogues for knowledge work, including Mary and Tom Poppendieck’s mapping to software development. So the translation is roughly:

- the software development analogue for the build phase, and

- a manufacturing analogue with bits rather than atoms for the run phase

Both are illustrated with examples specific to data. The drawings only show bits flowing through a running factory, but you can imagine ideas flowing through a development team as the equivalent for build.

I talk about data products as the end result of this development and manufacturing (or data production) activity, but I also consider the complementary design or marketing perspective: which problems do these products solve for a consumer, and how well (regardless of how they are made)? This brings us to…

Overproduction

Overproduction is delivering things consumers don’t need and haven’t asked for.

| Phase | Waste | Examples |
|---|---|---|
| Build | Unnecessary products, over-designed products | For instance, a report that no-one will read, a 3D widget where a table will do, or a unified data model that doesn’t suit any one consumer. |
| Run | Unused products | A report that people stopped reading long ago. A data set no-one ever accesses. |

The consequence of overproduction is that productive capacity is consumed with no business impact. These products are useless and prevent us from creating value.

Given that some studies have found 50% of product features are rarely or never used, the cost of overproduction may be as much as 50% of your data budget, yet many organisations have very limited visibility of overproduction in data.
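One way to gain some of that visibility is to join an inventory of data products and their running costs to access logs, and estimate how much spend goes to products nobody uses. The sketch below assumes hypothetical inputs: a mapping from product name to monthly cost, and a list of (product, reader) access events over some look-back window. Real platforms expose this information in their own ways, and the names and figures here are illustrative only.

```python
def overproduction_share(costs: dict[str, float],
                         access_events: list[tuple[str, str]],
                         min_readers: int = 1) -> tuple[float, list[str]]:
    """Return (share of total cost spent on unused products, unused product names).

    A product counts as unused if it has fewer than `min_readers` distinct
    readers in the access log (a hypothetical threshold)."""
    readers: dict[str, set[str]] = {}
    for product, reader in access_events:
        readers.setdefault(product, set()).add(reader)

    unused = sorted(p for p in costs if len(readers.get(p, set())) < min_readers)
    total = sum(costs.values())
    wasted = sum(costs[p] for p in unused)
    share = wasted / total if total else 0.0
    return share, unused


# Hypothetical monthly costs and a look-back window of access events.
costs = {"sales_daily": 400.0, "legacy_kpi_report": 250.0, "churn_features": 350.0}
events = [("sales_daily", "alice"), ("sales_daily", "bob"), ("churn_features", "ml-service")]

share, unused = overproduction_share(costs, events)
print(f"{share:.0%} of spend on unused products: {unused}")
```

Here a quarter of the (made-up) spend goes to a report that nobody opened in the window. The threshold and window are judgement calls; a product read once a quarter by one person may still be worth questioning.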

Overproduction refers to finished goods, but until they are finished, they are …

Inventory

Inventory is partially completed work that causes a drain on resources while embodying little or no realisable value.

| Phase | Waste | Examples |
|---|---|---|
| Build | Work in progress | Batching up development effort on data ingest activities, or platform features, without validating the use cases that motivate this data or functionality. |
| Run | Incomplete pipelines (data not connected to consumers) | Data delivered to a data platform but not being used. |

The consequence of inventory is that no value is realised from effort to date, and as a result, unbounded effort may be expended before delivering value.

The cost that can be sunk into building and populating a data platform that is not connected to consumers (representing data production inventory) is, for all intents and purposes, unbounded. Without connecting to consumers, very large initiatives could reach their conclusion with substantial data inventory (which, ironically, might be considered a success measure until close to that point) but marginal to no business value delivered.
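Where lineage metadata is available, inventory can be made visible as datasets with no downstream path to any consumer. A minimal sketch, assuming lineage is exported as a hypothetical mapping from each node to the nodes it feeds, and that consumers (reports, apps, partner feeds) are listed separately: walk upstream from the consumers, and anything never reached is inventory.

```python
from collections import deque


def find_inventory(edges: dict[str, list[str]],
                   datasets: set[str],
                   consumers: set[str]) -> set[str]:
    """Return datasets with no downstream path to any consumer.

    `edges` maps a node to the nodes it feeds (upstream -> downstream)."""
    # Reverse the graph so we can walk upstream from each consumer.
    upstream: dict[str, list[str]] = {}
    for src, dsts in edges.items():
        for dst in dsts:
            upstream.setdefault(dst, []).append(src)

    # Breadth-first search from all consumers towards their sources.
    reached: set[str] = set(consumers)
    queue = deque(consumers)
    while queue:
        node = queue.popleft()
        for parent in upstream.get(node, []):
            if parent not in reached:
                reached.add(parent)
                queue.append(parent)

    return datasets - reached


# Hypothetical lineage: clickstream data is ingested and transformed, but never consumed.
edges = {
    "raw_orders": ["clean_orders"],
    "clean_orders": ["sales_report"],
    "raw_clickstream": ["sessionised_clicks"],
}
datasets = {"raw_orders", "clean_orders", "raw_clickstream", "sessionised_clicks"}
print(sorted(find_inventory(edges, datasets, consumers={"sales_report"})))
```

This prints `['raw_clickstream', 'sessionised_clicks']`: effort has been spent ingesting and processing that data, but none of it has reached a consumer yet.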

A possible cause of failing to deliver finished goods from inventory is the additional effort associated with …

Over-Processing

Over-Processing is doing more work than is necessary to deliver on an objective.

| Phase | Waste | Examples |
|---|---|---|
| Build | Reinventing products, working with untrusted data | Duplicated, divergent reports. A unified data model that doesn’t suit any one consumer. Excessive logic to manage poor data quality. |
| Run | Correcting errors from upstream, propagating redundant data | Filling missing data and correcting schema violations with best guesses. Passing on data that isn’t valuable downstream. |

The consequence of over-processing is the expenditure of unnecessary effort to realise value. This may feel like everything is harder than it should be.

Any code that exists to correct errors downstream of a source is over-processing, as is any duplicated reporting. Consider how much of this over-processing you may be doing, and what might change if you were to measure it.
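A minimal sketch of such a measurement, assuming you can tag each pipeline step with its purpose and attribute a cost to it (compute hours, engineer hours or dollars). The purpose tags, step names and costs are all hypothetical; the point is simply to sum the cost of steps that only correct upstream errors or duplicate other outputs.

```python
from dataclasses import dataclass

# Hypothetical purpose tags that indicate over-processing.
OVER_PROCESSING_TAGS = {"correct_upstream", "duplicate_report"}


@dataclass
class Step:
    name: str
    purpose: str  # e.g. "transform", "correct_upstream", "duplicate_report"
    cost: float   # any consistent unit: compute hours, engineer hours, dollars


def over_processing_share(steps: list[Step]) -> float:
    """Fraction of total cost spent on steps tagged as over-processing."""
    total = sum(s.cost for s in steps)
    wasted = sum(s.cost for s in steps if s.purpose in OVER_PROCESSING_TAGS)
    return wasted / total if total else 0.0


steps = [
    Step("parse_orders", "transform", 5.0),
    Step("fill_missing_postcodes", "correct_upstream", 2.0),
    Step("fix_schema_violations", "correct_upstream", 1.0),
    Step("regional_sales_v2", "duplicate_report", 2.0),
]
print(f"{over_processing_share(steps):.0%}")
```

With these made-up numbers, half the effort is over-processing. The tagging itself is the hard part, and doing it at all tends to start useful conversations with upstream teams.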

Work must move between processing stages, but in doing so, we should minimise …

Transportation

Transportation is moving products around in a way that is costly and may cause damage.

| Phase | Waste | Examples |
|---|---|---|
| Build | Handoffs between siloed teams | Source app team → ingest team → platform team → analytics team → consuming app team… each team loses context from the last. |
| Run | Data replication without reproducibility | Creating unnecessary backups because systems aren’t trusted. Copying Excel files. You can damage a digital copy by losing its provenance. |

The consequence of transportation is additional effort that may also reduce quality. There’s no clear single place to find what you need, and the more it moves, the less you trust it.

How much time have you lost to misunderstandings between teams, or to establishing the provenance of data? At best this lowers productivity; at worst it may be catastrophic if auditability is sufficiently degraded. This is the cost of transportation in data production.
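Copies need not lose their provenance. As a minimal sketch (the sidecar file naming and its fields are assumptions, not a standard), each copy can carry a small record of where it came from plus a SHA-256 checksum, so anyone holding the copy can check it is still identical to what was copied.

```python
import hashlib
import json
import shutil
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash the file in 1 MiB chunks so large files needn't fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copy_with_provenance(src: Path, dst: Path) -> Path:
    """Copy src to dst and write a JSON provenance sidecar next to the copy."""
    shutil.copy2(src, dst)
    record = {
        "source": str(src.resolve()),
        "sha256": sha256_of(src),
        "copied_at": datetime.now(timezone.utc).isoformat(),
    }
    sidecar = dst.with_name(dst.name + ".provenance.json")
    sidecar.write_text(json.dumps(record, indent=2))
    return sidecar


def verify_copy(dst: Path) -> bool:
    """True if the copy still matches the checksum recorded at copy time."""
    sidecar = dst.with_name(dst.name + ".provenance.json")
    record = json.loads(sidecar.read_text())
    return sha256_of(dst) == record["sha256"]


with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "sales.csv"
    src.write_text("region,amount\nnorth,10\n")
    dst = Path(tmp) / "sales_copy.csv"
    copy_with_provenance(src, dst)
    intact = verify_copy(dst)                     # True: copy matches its source
    dst.write_text("region,amount\nnorth,99\n")   # someone edits the copy in place
    tampered = verify_copy(dst)                   # False: the check now fails
print(intact, tampered)
```

A checksum only tells you the copy has diverged, not why; reproducibility still depends on being able to regenerate the data from its source.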

In addition to transportation, the act of processing may include unnecessary …

Motion

Motion is extra activity that doesn’t add to the product, creates opportunities for defects, and takes a toll on workers.

| Phase | Waste | Examples |
|---|---|---|
| Build | Context switching | For example, a dedicated data ingest team working across multiple sources (work in progress), which may also frequently break (incident toil). |
| Run | Manual intervention or finishing of products | Copy this here, rename file X and save over there, run script Y, … |

The consequence of motion is that work is complicated, in a way that is bad for people. Every job requires more actions than it should.

How much time and energy do you lose to picking up and putting down work, including switching to manual intervention in data production? This loss can increase dramatically as the number of concurrent tasks increases. This is the cost of motion.
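A toy model shows why this overhead grows so quickly. It assumes, purely for illustration, that each concurrent task beyond the first costs a fixed 20% of a person’s time in switching; the parameter name and value are hypothetical, so substitute your own estimate.

```python
def productive_share(concurrent_tasks: int, switch_overhead: float = 0.2) -> float:
    """Share of time left for productive work under a toy linear model.

    Each task beyond the first costs `switch_overhead` of total time
    (a hypothetical parameter); the result is floored at zero."""
    if concurrent_tasks < 1:
        raise ValueError("need at least one task")
    lost = switch_overhead * (concurrent_tasks - 1)
    return max(0.0, 1.0 - lost)


for n in range(1, 6):
    total = productive_share(n)
    print(f"{n} tasks: {total:.0%} productive, {total / n:.0%} per task")
```

Under these assumptions, three concurrent tasks leave 60% of time productive and only 20% for each task, so every task takes five times as long as it would with full focus.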

All of the above reduce the flow of build or run to an extent. Collectively and in interaction with batching up work, they cause …

Waiting

Waiting occurs when people or resources aren’t ready to pick up work as it arrives.

| Phase | Waste | Examples |
|---|---|---|
| Build | Delays due to handoffs between siloed teams, long feedback cycles in development | Waiting for requirements or feedback on deliverables. Exacerbated in data-intensive applications by functionally-specialised handoffs (see transportation), long-running batch jobs, and a promote-then-test approach. |
| Run | Lead time to discover data, and from business event to insight or action | No-one owns this data or can tell you definitively if it exists, what’s in it, and where to find it. Reports come on a fixed schedule set by processing capabilities, not business needs. |

The consequence of waiting is that business value realisation is delayed, in an environment where value decays rapidly. We might summarise this as: it would have been nice to have this data yesterday.

Where handoffs occur between teams, tasks may take 12 times as long to complete at the median, and much longer in extreme cases. Cascading scheduled batch jobs, with buffers and retries to absorb uncontrolled variability and quality issues, can quickly add up to insight lead times measured in weeks.
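The arithmetic of cascading schedules is easy to sketch. Assume (hypothetically) that each stage runs on a fixed period and picks up whatever arrived since its last run. In the worst case, input arrives just after a run starts, so it waits up to a full period, then incurs the runtime plus any retry buffer. The stage names and durations below are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    period_h: float             # how often the stage is scheduled, in hours
    runtime_h: float            # how long one run takes
    retry_delay_h: float = 0.0  # buffer added when a run fails and waits to be retried


def worst_case_lead_time_h(stages: list[Stage]) -> float:
    """Approximate upper bound on lead time from business event to insight, in hours.

    Per stage: wait up to one full period for the next run, then runtime,
    then any retry delay."""
    return sum(s.period_h + s.runtime_h + s.retry_delay_h for s in stages)


pipeline = [
    Stage("source extract", period_h=24, runtime_h=2),
    Stage("ingest to lake", period_h=24, runtime_h=3, retry_delay_h=24),  # failed run waits a day
    Stage("warehouse model", period_h=24, runtime_h=4),
    Stage("weekly report", period_h=168, runtime_h=1),
]
lead = worst_case_lead_time_h(pipeline)
print(f"worst case: {lead:.0f} hours (about {lead / 24:.1f} days)")
```

Under these assumptions the worst case is 274 hours, about 11.4 days. Only 10 of those hours are processing; the rest is waiting on schedules and retry buffers.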

The factors above contribute to and are also caused by the introduction of …

Defects

Defects are the failure to do something, or the failure to do it right.

| Phase | Waste | Examples |
|---|---|---|
| Build | Defects in processing code | Query specifies ‘m’ for minutes instead of intended ’M’ for month. |
| Run | Defects in data produced | People get the wrong emails. |
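A cheap guard against this class of defect is to test format patterns against a fixed, known timestamp before they reach production. The sketch below uses Python’s `strftime`, where the case convention happens to be the reverse of Java-style patterns (as used by `java.time.format.DateTimeFormatter`, where `M` is month and `m` is minutes): in Python, `%m` is month and `%M` is minutes. The helper names and the test timestamp are hypothetical.

```python
from datetime import datetime

# A fixed timestamp whose month (03) and minute (42) differ, so a mix-up shows.
KNOWN = datetime(2021, 3, 15, 10, 42)


def month_key(ts: datetime, pattern: str = "%Y-%m") -> str:
    """Partition key for monthly aggregation (hypothetical helper)."""
    return ts.strftime(pattern)


def check_month_pattern(pattern: str) -> None:
    """Fail fast if `pattern` does not produce a year-month key."""
    got = month_key(KNOWN, pattern)
    if got != "2021-03":
        raise ValueError(f"pattern {pattern!r} produced {got!r}, expected '2021-03'")


check_month_pattern("%Y-%m")      # passes: %m is month
try:
    check_month_pattern("%Y-%M")  # the defect: %M is minutes
except ValueError as err:
    print(err)
```

This prints `pattern '%Y-%M' produced '2021-42', expected '2021-03'`, catching the defect in the build phase rather than in the data produced.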

The consequence of defects is that the organisation’s risk exposure increases while the consumer value delivered falls, and remediation creates extra effort. Defects therefore have the potential to damage the business and create yet more work.

The cost of defects can be catastrophic, especially when related to personal information. If defects cause significant ongoing toil, reducing defects is a major lever for increasing productive capacity (e.g., if defects consume ~30% of capacity, eliminating them raises productive capacity from 70% to 100%, an improvement of ~43%).
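The underlying arithmetic is simple enough to check for your own numbers: if defect work consumes a fraction d of capacity, only 1 - d is productive, so eliminating it raises productive capacity by 1/(1 - d) - 1. At d = 0.3 that is about 43%; at d = 1/3 it is 50%.

```python
def capacity_gain(defect_share: float) -> float:
    """Relative increase in productive capacity from eliminating defect work.

    Productive capacity rises from (1 - defect_share) to 1."""
    if not 0 <= defect_share < 1:
        raise ValueError("defect_share must be in [0, 1)")
    return 1 / (1 - defect_share) - 1


for d in (0.1, 0.3, 1 / 3, 0.5):
    print(f"defects {d:.0%} of capacity -> {capacity_gain(d):.0%} more productive capacity")
```

The gain grows faster than the defect share itself, which is why defect-heavy teams can see outsized returns from reducing defects.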

Conclusion

We can see these wastes are inter-connected and sometimes mutually reinforcing. Look out for these wastes in your work with data; find your own examples. I have found recognising these various wastes and being able to quantify their potential impact helps identify and prioritise improvement efforts. There are approaches and solutions to reduce these wastes, but I won’t address any of those here. Instead I will just encourage you to take some time to understand the problem; there’s a lot you can do with knowledge of waste in data production to define and drive change for the better.

Thanks to Ned Letcher and Yekaterina Khomyakova for feedback on these wastes, which were included as part of their presentation on Data Mesh for the 2021 LAST Conference Melbourne.