This was supposed to be a regular digital project that consistently made progress towards the objective… right? Problem statement, some analysis, build a solution bit by bit, deploy, …, nice linear progression… but no… we were wrong!

It turned out to be an R&D project. Often R&D projects change direction and sometimes seem to go backwards. Is this a problem? What should you do if it is? Let’s explore.

How to spot an R&D project

It’s in the name: any work that requires substantial research, discovery or creation of knowledge to inform the development of solutions.

But what’s in a name? You might not realise that substantial knowledge gaps existed when you started the project; they might only become apparent during development, i.e. they were unknown unknowns. Or it might be hard to demonstrate to stakeholders that there are substantial knowledge gaps within your organisation, especially if the solution is perceived as simple, or if there is related art to reference.

The research could be in data science/ML/AI, or could be user research, market or technology scans, etc. Anything where we’re doing something new and have a lot of known unknowns.

In any case, you need to be prepared to identify and effectively communicate knowledge gaps if and when they become apparent. If it’s not widely recognised as an R&D project from the outset, then the first sign outside the project will probably be that delivery has slowed, stalled, or even gone backwards.

Should you act, and how?

These signs are a normal part of R&D projects and may simply reflect the natural variability of R&D work. If the team has the right ingredients, it may be nothing to worry about, but if the slowdown is particularly uncharacteristic or persistent, or if the team has gaps, then it warrants further investigation.

Investigation may determine the team lacks skills, isn’t following good practices, is struggling with poor tooling, is hampered by organisational dependencies, or is affected by other environmental factors. The right management intervention will depend on the combination of causes, but none of these causes per se require R&D management.

If your investigation finds that progress is hampered by a lack of knowledge (a team that doesn’t know the right things to do), alone or in combination with the other factors above, then you should consider managing the work as an R&D project, as discussed below.

Management of R&D projects

As a leader or manager of an R&D project, which is defined by uncertainty, what can you do to help? And how can you be confident that you are helping, rather than just acting for the sake of acting? Some interventions may have no effect or may even be counter-productive, and it may be hard to see any immediate response to your interventions.

The good news is that, as a leader or manager, you can have a big positive impact on R&D projects, even without deep understanding of the problem space or solutions, and even when it takes a little while to see the effect of your actions. Leaders and managers can assess the health of R&D projects and communicate with stakeholders using key leading measures, and act along these dimensions with their teams to improve the health of R&D projects.

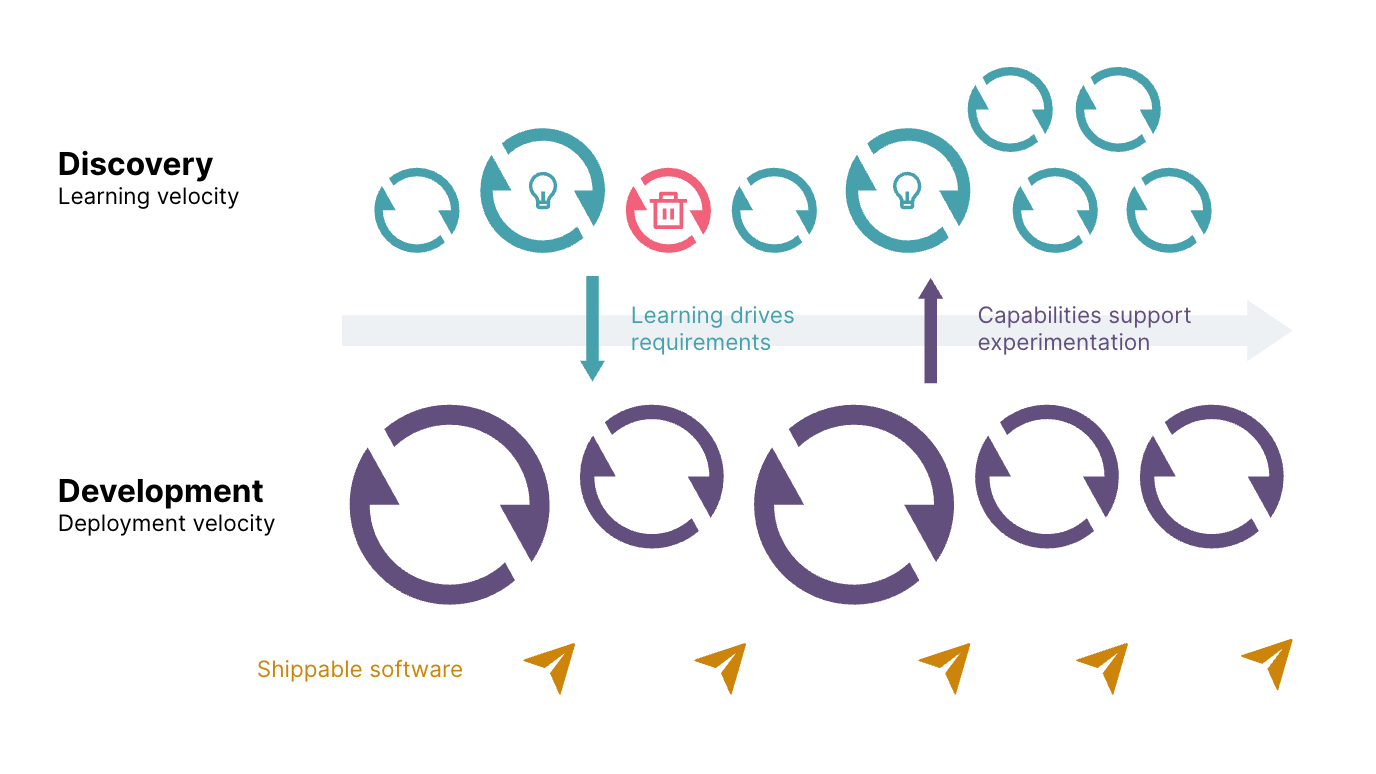

The precondition for the approach I describe below is that your work is structured along the lines of dual track delivery, with a track for research and a track for development, and coordination between the tracks, as described in R&D burn up. That post also explains why regular agile development reporting and management fall short in the case of R&D projects, for both teams and their stakeholders.

Management then balances the following three key dimensions:

- Experiment portfolio diversification

- Rapid experimentation cycles

- Responsively exploiting successful experiments

If you can do these things well in R&D projects, you set yourself up for success, or at least for rapid identification and effective mitigation of issues.

Experiment portfolio diversification

Experiment portfolio diversification means we have lots of options for next steps when we’ve exhausted the possibilities of our current work. It means we don’t waste time between one experiment and the next before doubling down on promising avenues of investigation or pivoting to alternatives. It may also mean we can run more experiments in parallel, if diverse experiments are not interdependent.

Research, in practice as well as in etymology, is based on search: the search of a space of possibilities. No Smooth Path to Good Design describes the importance of user-centric design processes for searching a space of potential value creation, but the search and learning activity it describes is equivalent in data science (or ML/AI) projects and in any other research activities.

In R&D, we’re seeking a solution design that produces the best (or at least viable) performance for a problem in a given context. The design-performance function we’re seeking to optimise is highly multi-dimensional and non-linear, so any search is likely to involve many pivots (this is also referenced in my talk A gentle introduction to embeddings). An insufficiently diversified experiment portfolio risks a perfunctory search that misses the local maxima that create outsized value.

Measures of experiment portfolio diversification include a simple count of experiments in the backlog (more ideas are likely to correlate with more diversity), a count of lines of investigation or categories, and a count of experiments per category. Dave Snowden and the Cynefin framework also recommend including naive and contradictory experiments.
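To make these counts concrete, here is a minimal Python sketch of how a team might compute them from its backlog. It assumes each experiment is tagged with a single category (line of investigation); the `Experiment` record, its fields and the example backlog entries are hypothetical, not a prescribed tool.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Experiment:
    # Hypothetical backlog record; field names are illustrative only.
    name: str
    category: str  # the line of investigation this experiment belongs to


def diversification_measures(backlog):
    """Return simple diversification measures for a backlog of experiments."""
    per_category = Counter(exp.category for exp in backlog)
    return {
        "experiments": len(backlog),         # total ideas in the backlog
        "categories": len(per_category),     # distinct lines of investigation
        "per_category": dict(per_category),  # depth within each line
    }


# Illustrative backlog, including a deliberately naive experiment.
backlog = [
    Experiment("tune embedding size", "model architecture"),
    Experiment("gradient boosting baseline", "model architecture"),
    Experiment("interview domain experts", "user research"),
    Experiment("keyword-matching rule", "naive baseline"),
]
print(diversification_measures(backlog))
```

Tracking these numbers over time, rather than as a one-off snapshot, is likely more informative: a shrinking category count can signal that the search is narrowing before the team has run out of promising avenues.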

Rapid experimentation cycles

Experiments produce evidence to support or invalidate a hypothesis, and they only “fail” when results are inconclusive and nothing is otherwise learned. To reduce the risk of inconclusive experiments, focus on conducting experimentation in rapid cycles. This helps reduce the scope and complexity of experiments, and helps us to test just one thing at a time.

This can aid in diversification, too, as we tease out orthogonal components of larger experiments, which may also allow parallel execution of experiments. It also allows us to continuously re-prioritise the portfolio of experiments based on latest information (from both tracks) and to run experiments just in time, minimising effort devoted to low-value experiments.

Cycle time is the measure for rapid experimentation. Stakeholders will also appreciate the clarity with which you are able to communicate your current state of knowledge (with respect to completed experiments and the remaining backlog), the control permitted by re-prioritisation, and some level of certainty about how quickly knowledge might advance in future based on past progress.
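As a rough illustration, cycle time can be computed from a simple log of when each experiment started and when it concluded with usable evidence. This sketch assumes such a log exists; the experiment names and dates are invented for the example.

```python
from datetime import date
from statistics import mean, median

# Hypothetical log of completed experiments: (name, started, concluded).
completed = [
    ("baseline model", date(2024, 3, 1), date(2024, 3, 8)),
    ("feature ablation", date(2024, 3, 6), date(2024, 3, 11)),
    ("user interviews", date(2024, 3, 11), date(2024, 3, 22)),
]


def cycle_times_days(log):
    """Days from starting each experiment to concluding it."""
    return [(end - start).days for _, start, end in log]


times = cycle_times_days(completed)
print(times, mean(times), median(times))
```

The median is often a more robust summary than the mean here, because a single long-running experiment can skew the average and obscure how quickly most experiments are turning over.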

Responsively exploiting successful experiments

A responsive delivery pathway for successful experiments is key to minimising the lead time to value delivery in enhancing business processes, product performance, or customer experiences. Responsive development means iterative rather than incremental delivery of R&D projects. We can’t work incrementally, or piece by piece to a known end state, as the end state is not known. Iterative development reduces the risk of a poor solution fit, as well as accelerating value delivery.

My talk Leave Product Development to the Dummies describes both (a) how simulation can be used on the discovery track to iteratively de-risk other product development activities and (b) how a simulator can itself be built in an iterative fashion, which is also demonstrated in Playing Games is Serious Business.

A key measure of iteratively delivering value from experimentation is the lead time from experimental results to seeing some consequent impact on a business process or customer experience. This encompasses both the solution development activities informed by individual experiments, and the tooling and practices used to rapidly and confidently deploy small changes of any nature.

This continuous delivery capability on the development track can also reduce cycle time for experimentation on the discovery track, reducing error-prone manual work by automating the provision of environments, experiment setup, execution, data collection and analysis.

The lead time for change in the solution to impact a business process, product performance in the wild, or customer experience, from when the change was determined by the result of an experiment, is the key measure in this case.
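A minimal sketch of this lead time measure follows, assuming the team records two timestamps per change: when an experiment result determined the change, and when its impact was first observed in a business process, product or customer experience. The record fields and values are hypothetical.

```python
from datetime import datetime
from statistics import median

# Hypothetical change records: when an experiment result determined the
# change ("decided") and when its impact was first observed ("impact").
changes = [
    {"change": "raise match threshold",
     "decided": datetime(2024, 4, 2, 10), "impact": datetime(2024, 4, 4, 16)},
    {"change": "add new model feature",
     "decided": datetime(2024, 4, 3, 9), "impact": datetime(2024, 4, 10, 9)},
    {"change": "reword onboarding prompt",
     "decided": datetime(2024, 4, 8, 14), "impact": datetime(2024, 4, 9, 10)},
]


def lead_times_hours(records):
    """Hours from the experiment-driven decision to observed impact."""
    return [(r["impact"] - r["decided"]).total_seconds() / 3600 for r in records]


hours = lead_times_hours(changes)
print(hours, median(hours))
```

Recording the decision timestamp at the point an experiment concludes, rather than when development work starts, keeps the measure honest about any queueing between the two tracks.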

Creative stakeholder engagement

Great, now you’re comfortable managing the uncertainty of R&D projects, but what about your stakeholders? They may not have experienced this type of work before, and may be very uncomfortable when they can’t connect early prototypes to the finished product, or when progress does not move monotonically towards the goal.

This is a human and creative challenge to solve. Just telling stakeholders what is happening may not be sufficient. Much better if they can actually experience the R&D process themselves. The more we can engage stakeholders, the more they can actively contribute to improving the three management levers, such as suggesting, prioritising and accelerating experiments with domain expertise, or facilitating rapid deployment through knowledge of processes and influence. With the hygiene factors under control, stakeholders who can be further engaged playfully will be better able to contribute to an effective problem solving environment in the team.

Solutions I’ve used to good effect include serious game playing, where we allowed stakeholders to play with “thin slices” of the finished product during iterative development, and narrative visualisation to find appropriate cultural metaphors for the technical work being undertaken. Think creatively about how best to engage the stakeholders in your own scenario, considering the message you want to convey and what you know about them, and don’t be afraid to experiment!

More on ML/AI and data

You can find more on product practices, engineering practices, team dynamics and organisational structure to facilitate good R&D work in ML/AI teams in Effective Machine Learning Teams. We cover dual track delivery in the chapter on product practices and, while we highlight the more experimental nature of ML projects, we don’t go into the level of detail on R&D management that I do in this post. Perhaps something like this will feature in the second edition!

If data is a major component of R&D work, you might find other sources of organisational waste to eliminate in 7 wastes of data production, and in the process drive shorter cycles of experimentation and reduce lead time to deploy results.

What to expect next

R&D projects are some of the most fun projects and present great opportunities for growth and creativity in professional environments. While the uncertainty and variable progress can be stressful for teams and stakeholders, good R&D practices supported by sound leadership and management practices can take a lot of the guesswork out of what to expect. These approaches I’ve shared won’t guarantee success, but they will reduce avoidable issues and they will allow you to respond more appropriately and confidently, and in a more timely manner, in a variety of circumstances.

Expect more from your next R&D project!

Comments

One response to “What to expect when you weren’t expecting an R&D project”

[…] Leaders and teams can devote their efforts along these three axes, which are explored in more detail in the follow-up post What to expect when you weren’t expecting an R&D project. […]